In this article, I’ll write about the freedom of speech, freedom of thought, science – and censorship, as their great enemy. I’ll start with a bit of retrospective, and then explain the reason, the “event” that got me thinking and writing this.

In a separate article, I discussed the topic of Theory vs Practice.

Table Of Contents (TOC):

- Website and YouTube channel financing

- History of science, and scientific consensus

- Censoring free speech

- Video on critical thinking and Internet information

- Update: Google Gemini AI

1. Website and YouTube channel financing

This website (and the BikeGremlin YouTube channel) gets most of its costs covered thanks to Google AdSense support (my Google AdSense experiment – update 2024: I’ve switched to Mediavine). The principle is simple: Google charges companies for showing their ads, I allow those ads to be shown on my websites and YouTube channels, and Google gives me 50% to 70% of what it charged.

Unlike most other companies, Google saw value in what I do as soon as I had published some 50 high-quality articles and recorded about 100 educational videos. The Google AdSense earnings have enabled me to cover all the website hosting costs and buy some remotely decent recording equipment. I’m grateful for that.

It’s a very fair deal. Google takes care of finding advertisers, charging them, and counting how many ads were shown and clicked, and I get my share without any hiccups, late payments, or other problems. Google AdSense is a set-and-forget system as far as content creators are concerned – letting us concentrate on what we do best.

This, among other things, helps me to remain objective and independent. For example, I can write about products and services objectively, without relying on sponsorship from any particular company.

Topics I write about aren’t considered to be controversial, so I’m not censored, but I don’t like injustice, and that’s why I’m writing this. What injustice? We’ll get to that, but first, let’s talk a bit about human history and science.

2. History of science, and scientific consensus

What is a scientific consensus? Briefly put (oversimplified): it is an explanation of the world and phenomena that holds water based on the knowledge and information available at the moment. Not every scientist has to agree with the consensus.

Why can this disagreement be beneficial?

There was a time when the consensus was that the Earth is flat.

Likewise, before the Apollo space mission, the consensus on how the Moon came to be was slightly different from our current consensus, which holds water based on all the knowledge and information we have (for) now.

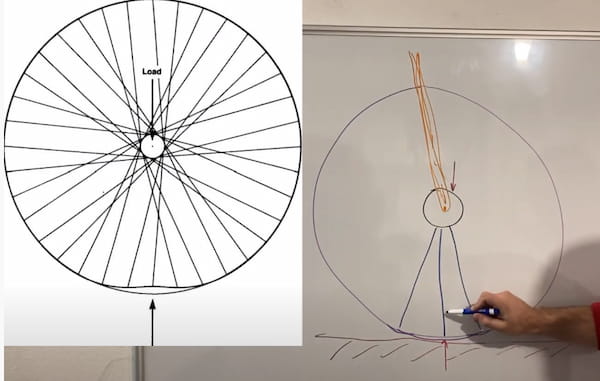

To take a simpler example – does a bicycle wheel’s hub hang on the top spokes, or stand on the bottom spokes?

Picture 1

Until 1981, when Jobst Brandt (an engineer, not a scientist) published his book and study called “The Bicycle Wheel,” the consensus among mechanics and engineers was that the hub hangs from the top spokes. I mean, “it’s only logical,” because we know that a spoke can only take tension, and not any compression loads (the spoke’s load-bearing properties are a fact no one disputes).

However, if one understands how pre-tensioned structures work, the illogical (hub standing on spokes, not hanging from them) becomes perfectly logical. This can be confirmed by measuring spoke tensions, and also by using finite element analysis (my video explaining the “Science behind the spokes – and the bicycle wheels”).
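To make the pre-tension idea a bit more tangible, here is a minimal sketch in Python. It is a deliberately crude model, not Brandt’s analysis: the function name `spoke_tensions_under_load` and all the numbers (36 spokes, 1000 N of pre-tension, an 800 N load, four spokes in the loaded zone near the ground) are my assumptions for illustration. It also assumes that the whole load shows up as an equal tension drop in those few bottom spokes, while every other spoke keeps its pre-tension (in a real wheel the other spokes change a little too).

```python
def spoke_tensions_under_load(num_spokes=36, pretension_n=1000.0,
                              load_n=800.0, bottom_spokes=4):
    """Return spoke tensions (in newtons) for a crudely modelled loaded wheel.

    Assumption (illustrative only): the load is carried entirely as an equal
    tension drop in the `bottom_spokes` nearest the ground; all other spokes
    keep their original pre-tension.
    """
    if not 0 < bottom_spokes <= num_spokes:
        raise ValueError("bottom_spokes must be between 1 and num_spokes")

    # Each bottom spoke gives up an equal share of the load.
    drop = load_n / bottom_spokes
    if drop > pretension_n:
        # A spoke cannot push: once its tension reaches zero it goes slack.
        raise ValueError("load is too high, the bottom spokes would go slack")

    tensions = [pretension_n] * num_spokes
    for i in range(bottom_spokes):
        tensions[i] = pretension_n - drop  # still positive, so still pulling
    return tensions


tensions = spoke_tensions_under_load()
print(min(tensions), max(tensions))  # 800.0 1000.0
```

In this toy model the bottom spokes never go into compression. They simply pull less than their neighbours, and that drop in tension is what carries the load, which is the sense in which the hub “stands” on the bottom spokes.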

At any given time in human history, we thought “this is it!” Only to be proven wrong about many things, with additional knowledge, information, and new theories based on those. I mean, there was a period when people thought that digital watches were a neat idea, just like today most people think that electric cars are awesome. 🙂

Granted, throughout our history, the vast majority of people who questioned the contemporary consensus have been wrong. However, thanks to the small percentage of those who got it right, we have many advances in science and technology.

There are ways of testing hypotheses, and disregarding any that don’t hold water. Suppressing the criticism of contemporary consensus, only because the majority of it is wrong, leads to medieval times.

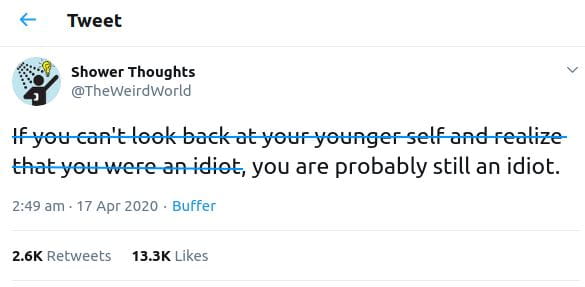

We should allow for opposing ideas, especially when we strongly disagree. And, of course, we should aim to keep our discussions civil and factual. Just, bear in mind:

Picture 2

3. Censoring free speech

As I’ve tried to explain in the previous part of this article, many things we now take for granted have been considered ludicrous before. Likewise, some of the things we now consider to be “a fact” may end up being refuted, as new knowledge and theories based on it come along.

Because of all that, I think that censorship of voicing different opinions and theories is a dangerous and dumbing-down trend. And that is exactly what governments and corporations are (openly, publicly) doing!

Without the freedom of speech (sharing ideas), there’s no freedom of thought. Without the freedom of thought, there is no freedom.

A concrete example is Google’s public announcement that they have stopped AdSense support for websites and YouTube channels that challenge one part of the current consensus – climate change in this case (link to Google’s announcement).

Let’s put aside the hypocrisy of, at the same time, happily sponsoring mind-numbing, spirit-crushing “entertaining” content – this kind of censorship is dangerous.

Of course, they aren’t directly censoring, they are “only” removing any funding – because when you’re hungry, you make better content? 🙂

Yes, I believe (not an expert in those areas) that vaccines are a great feat for minimizing human suffering, the Earth is most probably round, and we are capable of destroying it with our stupidity and shortsightedness. But that’s not the point. What is the point then?

“We are all agreed that your theory is crazy. The question that divides us is whether it is crazy enough to have a chance of being correct.”

– Niels Bohr in a reply to Wolfgang Pauli

- Education, raising awareness, refuting theories that don’t hold water (with good arguments) is good.

- Censorship inevitably leads to dumbing people down – exactly what current censors are allegedly trying to prevent.

Because, among the million stupid ideas and theories, we’ve always been able to strike gold from time to time. In a mountain village without electricity, Nikola Tesla would probably be pronounced to be a crazy idiot, while the villagers would think of themselves as very smart and wise.

99% of the people on this planet (including myself) are complete idiots about 99% of the things that exist. We only have one, or a few areas of competence/expertise. Never forget:

Picture 2 – again! 🙂

That’s why it’s dangerous to give oneself the right to decide which theories and studies can, and which can’t be published.

Is it better to teach a child to tuck and roll, or try to save it from ever falling down?

Is it better to teach people to think critically and educate them, or to try and tell them what to think?

Now they are burning the anti-vaxxers, flat-Earthers and the lot. Will they stop there? Will they be able to tell the difference? Who will stop them when they come for you?

4. Video on critical thinking and Internet information

5. Update: Google Gemini AI

Below is a tweet by Mario Juric (his Twitter profile) that nicely explains the problems with Google (BikeGremlin I/O article link). It is a comment on Google’s blunder with their image-generating AI, and on their PR stunt in response (“Gemini image generation got it wrong. We’ll do better.”).

I wrote this article in January 2022 (BikeGremlin publish date archive), but the situation seems to have only gotten worse since then.

I will copy/paste the tweet’s text here, in case it gets removed for whatever reason:

I’m done with
@Google. I know many good individuals working there, but as a company they’ve irrevocably lost my trust. I’m “moving out”. Here’s why: I’ve been reading Google’s Gemini damage control posts. I think they’re simply not telling the truth. For one, their text-only product has the same (if not worse) issues. And second, if you know a bit about how these models are built, you know you don’t get these “incorrect” answers through one-off innocent mistakes. Gemini’s outputs reflect the many, many, FTE-years of labeling efforts, training, fine-tuning, prompt design, QA/verification — all iteratively guided by the team who built it. You can also be certain that before releasing it, many people have tried the product internally, that many demos were given to senior PMs and VPs, that they all thought it was fine, and that they all ultimately signed off on the release. With that prior, the balance of probabilities is strongly against the outputs being an innocent bug — as
@googlepubpolicy is now trying to spin it: Gemini is a product that functions exactly as designed, and an accurate reflection of the values people who built it. Those values appear to include a desire to reshape the world in a specific way that is so strong that it allowed the people involved to rationalize to themselves that it’s not just acceptable but desirable to train their AI to prioritize ideology ahead of giving user the facts. To revise history, to obfuscate the present, and to outright hide information that doesn’t align with the company’s (staff’s) impression of what is “good”. I don’t care if some of that ideology may or may not align with your or my thinking about what would make the world a better place: for anyone with a shred of awareness of human history it should be clear how unbelievably irresponsible it is to build a system that aims to become an authoritative compendium of human knowledge (remember Google’s mission statement?), but which actually prioritizes ideology over facts. History is littered with many who have tried this sort of moral flexibility “for the greater good”; rather than helping, they typically resulted in decades of setbacks (and tens of millions of victims). Setting social irresponsibility aside, in a purely business sense, it is beyond stupid to build a product which will explicitly put your company’s social agenda before the customer’s needs. Think about it: G’s Search — for all its issues — has been perceived as a good tool, because it focused on providing accurate and useful information. Its mission was aligned with the users’ goals (“get me to the correct answer for the stuff I need, and fast!”). That’s why we all use(d) it. I always assumed Google’s AI efforts would follow the pattern, which would transfer over the user base & lock in another 1-2 decade of dominance. But they’ve done the opposite. 
After Gemini, rather than as a user-centric company, Google will be perceived as an activist organization first — ready to lie to the user to advance their (staff’s) social agenda. That’s huge. Would you hire a personal assistant who openly has an unaligned (and secret — they hide the system prompts) agenda, who you fundamentally can’t trust? Who strongly believes they know better than you? Who you suspect will covertly lie to you (directly or through omission) when your interests diverge? Forget the cookies, ads, privacy issues, or YouTube content moderation; Google just made 50%+ of the population run through this scenario and question the trustworthiness of the core business and the people running it. And not at the typical financial (“they’re fleecing me!”) level, but ideological level (“they hate people like me!”). That’ll be hard to reset, IMHO. What about the future? Take a look at Google’s AI Responsibility Principles (https://ai.google/responsibility/principles/…) and ask yourself what would Search look like if the staff who brought you Gemini was tasked to interpret them & rebuild it accordingly? Would you trust that product? Would you use it? Well, with Google’s promise to include Gemini everywhere, that’s what we’ll be getting (https://technologyreview.com/2024/02/08/1087911/googles-gemini-is-now-in-everything-heres-how-you-can-try-it-out/…). In this brave new world, every time you run a search you’ll be asking yourself “did it tell me the truth, or did it lie, or hide something?”. That’s lethal for a company built around organizing information. And that’s why, as of this weekend, I’ve started divorcing my personal life and taking my information out of the Google ecosystem. It will probably take a ~year (having invested in nearly everything, from Search to Pixel to Assistant to more obscure things like Voice), but has to be done. Still, really, really sad…

Mario Juric

I also wrote about the downsides and dangers of AI.